You are all set to begin your learning path with this Essential course, the first and foundational stepping stone. Through carefully tailored content, you are mentored in one of the most popular Big Data tech stacks: Hadoop. Equally important, the curriculum covers the roles and responsibilities of a Data Engineer. The course gives a clear introduction to Data Pipelines, Data Processing, HDFS, Resource Management, Data Access, and Pipeline Automation.

You will develop a hands-on, real-world data engineering pipeline using a publicly available dataset and its business context. If your system does not have enough resources to run the installations, a hands-on session on setting up a server on AWS Cloud is included as an add-on to this course.

Pre-requisites (Free)

SQL Foundation

Shell/Bash Scripting for Beginners

System Requirements

CPU: Quad-core Intel i5 or better, or Apple M1

Memory: 16GB

OS: Windows or macOS

Don't worry if your system lacks the capacity: towards the end of this course, you will be guided through procuring an AWS Cloud server for your practice.

Modes of Training

Online Interactive Sessions

Recorded Video Sessions (from the latest online batch)

Resources

Approximate number of sessions: 25 (varies across batches)

You get lifetime access to the recorded videos along with all supporting documents, logs, references, and software, if any.

Placements

This course alone does not make you ready for the job market. Complete the next-level Booster course to become eligible for our placement support.

Chapter 1: INTRODUCTION

- Responsibilities

- ETL/ELT

- Data Sources

- Batch Processing

- Stream Processing

- Data Lake

- Data Warehouse

- Data Marts

- Data Staging

- Data Integration

- Administration

- Data Optimizations

- Required Skills

Chapter 2: DATA PIPELINES

- Pipelines

- Automation & Scheduling

- Handling Exceptions

- Logging

Chapter 3: INSTALLING HADOOP

- Hadoop vs RDBMS

- System requirements

- Installation Modes

- Pre-requisites

- Installation

- Real-world Installations

- Questions & Answers

Chapter 4: HADOOP INTRODUCTION

- Hadoop Ecosystem

- Hadoop Distributions

- Evolution

- Storage

- Resources

- Processing

- Data Access

- Applications

Chapter 5: HDFS STORAGE

- HDFS Intro & Architecture

- Nodes & File System

- Data blocks

- Racks & Replications

- High Availability

- Space Reclamation

- Story in Short

- Hands-on

- Questions & Answers

Chapter 6: RESOURCE MANAGEMENT

- YARN Intro

- Architecture

- Resource Manager

- Resource Manager HA

- Node Manager

- Application Master & Containers

- Workflow

- ZooKeeper

- Story in Short

- Hands-on

Chapter 7: DATA PROCESSING

- Processing Engines

- MapReduce Intro

- MapReduce Architecture

- Mappers

- Reducers

- Spark Intro

- Spark Architecture

- Spark Workflow

- Spark Terms

- Spark vs MapReduce

- Story in Short

Chapter 8: ACCESS DATA

- Hive Intro

- Hive Architecture

- Hive Hands-on

- Pig Intro

- Pig Architecture

- Pig Latin

- Pig Hands-on

Chapter 9: SCHEDULING JOBS

- Oozie Intro

- Architecture

- Scheduling

- Hands-on

Chapter 10: ESSENTIAL REALTIME PROJECT

- Business & Dataset

- Data Dictionary

- Dump Data

- Design Pipeline

- Pipeline Development

- Oozie Workflow

- Conclusion

Chapter 11: AWS CLOUD SETUP

- AWS Account

- EC2 Instance

- Setup & Login

- Port Forwarding

- Docker & Verify

Chapter 12: QUESTIONS & ANSWERS

Frequently Asked Questions (FAQs)

There are two modes of training: online instructor-led sessions and recorded video sessions. You can purchase the latter at any time; for the former, look out for the schedule on this page.

This is the foundational course towards becoming a Data Engineer; you also need to complete the Booster course to be market ready.

Basic SQL, Python, and shell scripting skills are the prerequisites. Don't worry if you lack them: we have free courses you can enroll in.

You will be part of the professional community, and you will get assistance whenever you hit a blocker.

After this foundational course, you will have to complete the next level to be market ready. You will also be assisted and guided in profile building and mock interviews.

Wondering what ORSKL can do for you?

Related Posts

- myadmin

- Data Analytics

- 1 Comments

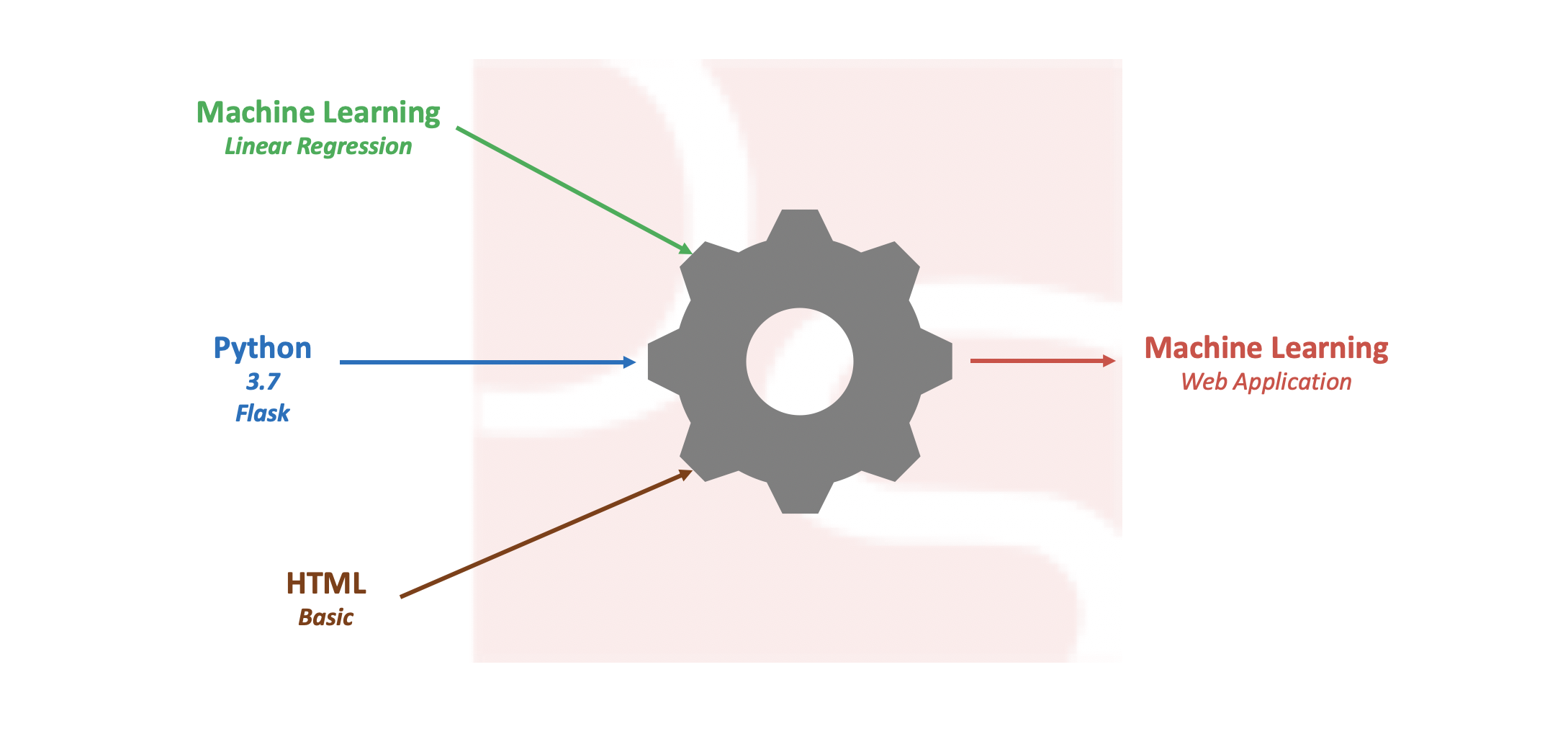

Build Simple Machine Learning Web Application using Python

Pre-processing data and developing efficient model on a given data set is one of the daily tasks of machine learning engineer with commonly used languages like Python or R. Not every machine learning engineer would get a chance or requirement to integrate the model into real time applications like web or mobile for end users […]

- myadmin

- Data Analytics

- 0 Comments

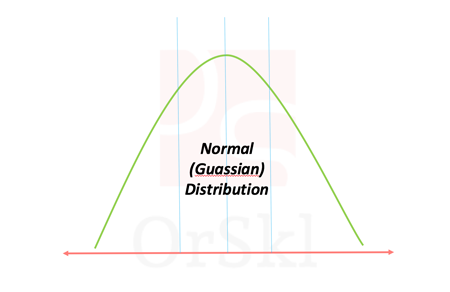

Ways to identify if data is Normally Distributed

Normal distribution also known as Gaussian distribution is one of the core probabilistic models to data scientists. Naturally occurring high volume data is approximated to follow normal distribution. According to Central limit theorem, large volume of data with multiple independent variables also assumed to follow normal distribution irrespective of their individual distributions. In reality we […]

- myadmin

- Data Analytics

- 0 Comments

Will highly correlated variables impact Linear Regression?

Linear regression is one of the basic and widely used machine learning algorithms in the area of data science and analytics for predictions. Data scientists will deal with huge dimensions of data and it will be quite challenging to build simplest linear model possible. In this process of building the model there are possibilities of […]

- myadmin

- General topics

- 14 Comments

Will Oracle 18c impact DBA roles in the market?

There has been a serious concern in the market with announcement of Oracle Autonomous database 18c release. Should this be considered as a threat to Oracle DBA’s roles in the market? Let us gather facts available on the Oracle web to understand what exactly this is going to be and focus on skill improvements accordingly. […]

- myadmin

- General topics

- 4 Comments

How Oracle database does instance recovery after failures?

INSTANCE RECOVERY – Oracle database have inherit feature of recovering from instance failures automatically. SMON is the background process which plays a key role in making this possible. Though this is an automatic process that runs after the instance faces a failure, it is very important for every DBA to understand how is it made […]

- myadmin

- Performance tuning

- 16 Comments

Why should we configure limits.conf for Oracle database?

Installing Oracle Database is a very common activity to every DBA. In this process, DBA would try to configure all the pre-requisites that Oracle installation document will guide, respective to the version and OS architecture. In which the very common configuration on UNIX platforms is setting up LIMITS.CONF file from /etc/security directory. But why should […]

- myadmin

- RMAN

- 9 Comments

Why RMAN needs REDO for Database Backups?

RMAN is one of the key important utility that every Oracle DBA is dependent on for regular day to day backup and restoration activities. It is proven to be the best utility for hot backups, in-consistent backups while database is running and processing user sessions. With all that known, as an Oracle DBA it will […]

- myadmin

- General topics

- 10 Comments

Will huge Consistent Reads floods BUFFER CACHE?

Oracle Database BUFFER CACHE is one of the core important architectural memory component which holds the copies of data blocks read from datafiles. In my journey of Oracle DBA this memory component played major role in handling Performance Tuning issues. In this Blog, I will demonstrate a case study and analyze the behavior of BUFFER […]

- myadmin

- Storage

- 11 Comments

Can a data BLOCK accommodate rows of distinct tables?

In Oracle database, data BLOCK is defined as the smallest storage unit in the data files. But, there are many more concepts run around the BLOCK architecture. One of them is to understand if a BLOCK can accommodate rows from distinct tables. In this article, we are going to arrive at the justifiable answer with […]

- myadmin

- Performance tuning

- 32 Comments

Can you really flush Oracle SHARED_POOL?

One of the major player in the SGA is SHARED_POOL, without which we can say that there are no query executions. During some performance tuning trials, you would have used ALTER SYSTEM command to flush out the contents in SHARED_POOL. Do you really know what exactly this command cleans out? As we know that internally […]